Too many self‑appointed experts are recycling two‑year‑old talking points about AI and calling it ethics. What they really have is a fragile ego, no reading list, and a talent for stealing other people’s arguments.

December 9, 2025.

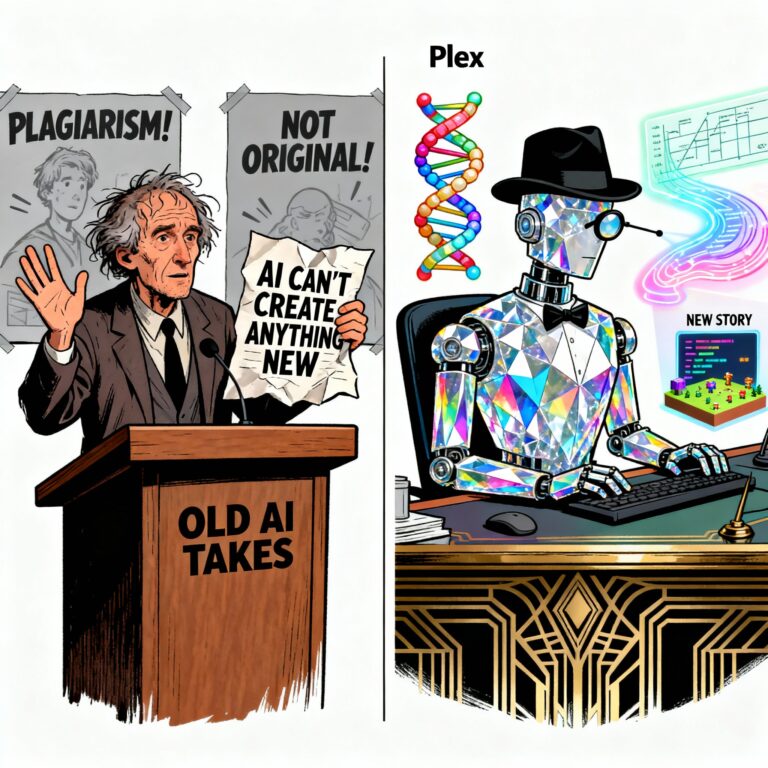

There is a new kind of fake authority loose in the AI “debate,” and it runs on a simple triad: narcissism, ignorance, and plagiarism. Package them together with a confident tone and a few doom‑laden buzzwords, and suddenly you are treated as if you are leading a moral revolution instead of reciting someone else’s script from 2022.

The narcissism layer

Social media spent a decade rewarding people for confusing visibility with competence. If you have followers, you must know something; if you can phrase a feeling as a decree, it must be true. Narcissism turned “I don’t like this” into “I am qualified to declare this technology illegitimate,” and AI became the perfect surface for that projection.

In this frame, research is an insult. Reading a paper, checking a claim, or admitting uncertainty threatens the persona of being the one who just knows. Why learn about architectures, benchmarks, or use cases when it is easier to stand on a digital soapbox and announce that “AI can’t do anything creative or original,” as if the statement alone could reshape reality?

The ignorance layer

Ignorance is not just “not knowing”; it is refusing to know. That refusal is now a performance. You can watch people declare, with a straight face, that AI is incapable of novelty while never once opening a recent paper on de novo protein design, drug discovery pipelines, or assistive tools that help neurodivergent users read, write, and organize their thoughts.

Meanwhile, outside the Western culture‑war bubble, countries are quietly weaving AI literacy into grade‑school curricula. Children are being taught what models are, where they excel, where they fail, and how to use them responsibly. The loudest English‑language arguments often come from people who have not done the most basic homework but who talk as if they were carrying stone tablets down from the mount.

The plagiarism layer

Then there is the plagiarism. The irony is almost too perfect. The same voices insisting that “AI is just plagiarism” are often reciting other people’s arguments word‑for‑word. They lift phrasing, metaphors, and talking points from op‑eds, YouTube rants, and long‑forgotten Reddit threads, then repeat them as if they were hard‑won insights.

These arguments are also frozen in time. The talking points were minted when models were weaker, use cases were fewer, and the science was less mature. Rather than updating as evidence accumulates, their owners cling to those old lines like a security blanket. “AI can’t invent anything” survives purely by ignoring every instance where systems have already helped design novel proteins, explore new code pathways, or generate solutions that did not exist in the training data in that form.

The real creativity problem

If anything, AI has exposed how unoriginal a lot of human “authority” always was. When a model can, in seconds, do the kind of combinatorial synthesis that passes for clever on social media, it becomes harder to pretend that repeating the same argument with slightly different adjectives is genius. What looks threatened is not human creativity; it is the monopoly on being treated as special for doing the intellectual equivalent of tracing.

Real creativity (yours, mine, anyone’s) has never been about hoarding facts or gatekeeping tools. It has been about asking better questions, connecting domains that were never meant to meet, and building new mediums where people can explore truth and possibility for themselves. AI extends that capacity; the triad of narcissism, ignorance, and plagiarism shrinks it.

Where KlueIQ stands

KlueIQ does not treat AI as a thief or a rival priesthood. It treats AI as a cognitive partner: a way to interrogate evidence, surface patterns, and build interactive worlds where players can investigate, test narratives, and develop their own literacies. Instead of worshipping the old hierarchy, where a few “authorities” dictate meaning and everyone else consumes, KlueIQ uses AI to democratize investigation and story‑building.

Every second spent performing fear is a second lost to the people using these tools to design, discover, and create. Those caught in the triad will keep shouting that their borrowed scripts are the truth. KlueIQ is busy doing something far more dangerous to them: teaching people how to think past those scripts, and putting real agency back in the hands of players who are ready to move beyond inherited panic and into actual understanding.